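In this article, I show how to implement the following four models in TensorFlow:

- ResNet-18
- ResNet-18 without shortcuts
- ResNet-50
- ResNet-50 without shortcuts

After implementing them, I train all four models on a simple dataset. The results show directly what the residual (shortcut) connections in ResNet contribute.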

Project repository: https://github.com/SingleZombie/DL-Demos

The main code is in dldemos/ResNet/tf_main.py.

Model implementation

Overall structure

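Because ResNet contains skip connections, it is awkward to build with the tf.keras.Sequential model, which can only chain layers one after another. Instead, we wire the model's modules together ourselves with the Keras functional API. In TensorFlow this looks like the following: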

```python
# Initialize input
...
```

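After creating an input tensor with layers.Input, we can apply computations to it. At the end, tf.keras.models.Model assembles the computation graph that connects this input tensor to the output.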
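Here is a minimal, self-contained sketch of this pattern. It is not the repository's code: the 32x32x3 input, the 16-channel convolutions and the 10-class head are arbitrary assumptions. They are chosen only to show a functional model with one skip connection.

```python
import tensorflow as tf
from tensorflow.keras import layers

# Assumed input shape, for illustration only.
inputs = layers.Input(shape=(32, 32, 3))

# Every layer applied to the symbolic tensor is recorded in the graph.
x = layers.Conv2D(16, 3, padding='same', activation='relu')(inputs)
y = layers.Conv2D(16, 3, padding='same')(x)

# A skip connection is just one more operation on tensors.
y = layers.Add()([x, y])
y = layers.ReLU()(y)

# Assumed 10-class classification head.
y = layers.GlobalAveragePooling2D()(y)
outputs = layers.Dense(10, activation='softmax')(y)

# Model gathers every layer between `inputs` and `outputs`.
model = tf.keras.models.Model(inputs=inputs, outputs=outputs)
print(tuple(model(tf.zeros((2, 32, 32, 3))).shape))  # (2, 10)
```

A Sequential model cannot express the `layers.Add()` line, because the tensor `x` is used twice.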

Next, let's look at how this output is actually computed.

```python
def init_model(
    ...
```

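When building the model, we must specify the shape of the input tensor. The function also takes two switches: model_name selects the architecture, and use_shortcut controls whether skip connections are used.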

```python
def init_model(
    ...
```

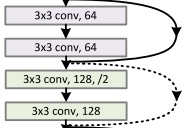

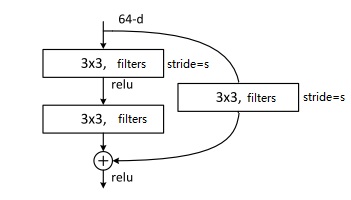

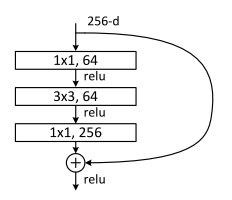

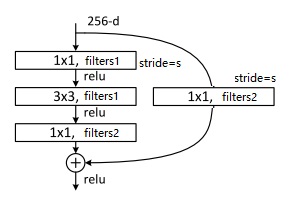

ResNet uses two main kinds of residual block.

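The first kind is drawn with solid shortcut lines in the figure above. Its input and output have the same shape, so the input can be added directly to the block's output just before the final activation. The second kind is drawn with dashed lines. Its input and output shapes differ, so the shortcut needs a 1x1 convolution to adjust the height, width and number of channels.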
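The shape bookkeeping behind these two block types can be shown without any framework. This NumPy sketch is an illustration only. `conv1x1` and `residual_add` are hypothetical helpers, and random arrays stand in for the residual branch F(x). When shapes match, the input is added directly. Otherwise it is projected with a strided 1x1 convolution, which amounts to sampling every other pixel and multiplying each pixel's channel vector by a weight matrix.

```python
import numpy as np

def conv1x1(x, w, stride=1):
    """1x1 convolution on an (H, W, C_in) array with weights (C_in, C_out)."""
    # Keep every `stride`-th pixel, then mix channels with a matrix multiply.
    return x[::stride, ::stride, :] @ w

def residual_add(x, fx, proj=None, stride=1):
    """Return relu(F(x) + shortcut(x)).

    proj=None: identity shortcut, x is added unchanged.
    Otherwise: x is first projected by a strided 1x1 convolution.
    """
    shortcut = x if proj is None else conv1x1(x, proj, stride)
    assert shortcut.shape == fx.shape, "shortcut and F(x) must match"
    return np.maximum(fx + shortcut, 0.0)

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 64))

# Identity block: F(x) keeps the shape, so x is added directly.
out1 = residual_add(x, rng.standard_normal((8, 8, 64)))

# Convolution block: F(x) halves H and W and doubles the channels,
# so the shortcut needs a 1x1 convolution with stride 2.
proj = rng.standard_normal((64, 128))
out2 = residual_add(x, rng.standard_normal((4, 4, 128)), proj, stride=2)
print(out1.shape, out2.shape)  # (8, 8, 64) (4, 4, 128)
```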

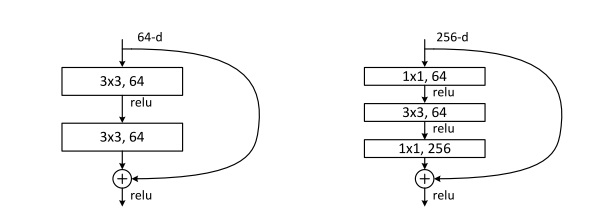

In addition, each kind of residual block comes in two implementations.

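The first implementation, shown on the left of the figure above, consists of two 3x3 convolutions with the same number of channels, so there is only one channel count to choose. The second, shown on the right, uses a bottleneck structure with three steps:

- a 1x1 convolution first reduces the number of channels;
- a 3x3 convolution follows;
- a final 1x1 convolution restores the channel count.

This gives two channel counts to choose: one shared by the first and second convolutions, and one for the third.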
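The bottleneck's advantage shows up when we count convolution weights. This sketch ignores biases and batch-norm parameters. The channel numbers follow the comparison in the ResNet paper: a basic block on 64 channels versus a bottleneck block on 256 channels that narrows to 64. The helper name `conv_weights` is hypothetical.

```python
def conv_weights(k, c_in, c_out):
    """Number of weights in a k x k convolution (no bias)."""
    return k * k * c_in * c_out

# Basic block: two 3x3 convolutions, 64 -> 64 -> 64 channels.
basic = conv_weights(3, 64, 64) + conv_weights(3, 64, 64)

# Bottleneck: 1x1 reduce (256 -> 64), 3x3 (64 -> 64), 1x1 expand (64 -> 256).
bottleneck = (conv_weights(1, 256, 64)
              + conv_weights(3, 64, 64)
              + conv_weights(1, 64, 256))

print(basic, bottleneck)  # 73728 69632
```

The bottleneck block handles four times as many channels at its input and output, yet has slightly fewer weights. This is because its expensive 3x3 convolution only sees 64 channels.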

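In the code, identity_block_2 and identity_block_3 are the two implementations of the block whose input and output shapes match. convolution_block_2 and convolution_block_3 are the two implementations of the block whose shapes differ. Their code is given in the next subsection.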

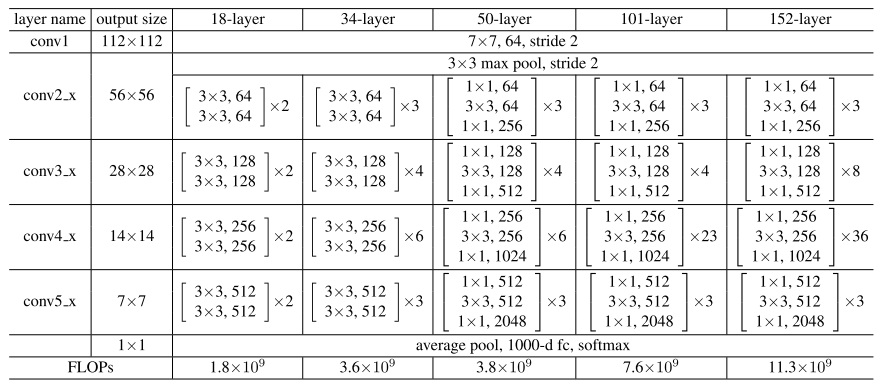

Now let's see how to assemble these blocks into ResNet-18 and ResNet-50. First, here is the architecture table from the original paper.

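Both architectures share the same start and end:

- they start with a large convolution (7x7, stride 2) followed by a pooling layer;
- they end with average pooling followed by a fully connected layer.

They differ in the convolutional stages in between. ResNet-18 stacks the two-convolution 3x3 blocks, two per stage. ResNet-50 stacks bottleneck blocks, with 3, 4, 6 and 3 blocks in its four stages.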
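The stage layout from the paper's table can be written down as plain data. This is a sketch, not the repository's code; the dictionary name `RESNET_STAGES` is illustrative. Counting the weighted layers recovers the 18 and 50 in the names. The count includes the stem convolution, every convolution on the residual branches, and the final fully connected layer. It leaves out the 1x1 shortcut projections, which the naming does not count.

```python
# (blocks per stage, convolutions per block) for conv2_x ... conv5_x
RESNET_STAGES = {
    'ResNet18': ([2, 2, 2, 2], 2),  # basic blocks: two 3x3 convolutions
    'ResNet50': ([3, 4, 6, 3], 3),  # bottleneck blocks: 1x1, 3x3, 1x1
}

def depth(model_name):
    blocks, convs_per_block = RESNET_STAGES[model_name]
    # 1 stem convolution + all residual-branch convolutions + 1 FC layer
    return 1 + sum(blocks) * convs_per_block + 1

print(depth('ResNet18'), depth('ResNet50'))  # 18 50
```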

In the code, the initial large convolution and pooling are written as:

```python
x = layers.Conv2D(64, 7, (2, 2), padding='same')(input)
...
```

The ResNet-18 implementation is:

```python
if model_name == 'ResNet18':
    ...
```

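Here, identity_block_2 takes the input tensor, the kernel side length, and whether to use the shortcut. convolution_block_2 takes the input tensor, the kernel side length, the number of output channels, the stride, and whether to use the shortcut.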
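For readers without the repository at hand, here is one possible Keras sketch of the two basic blocks, using the argument order described above. It is an illustration under assumptions, not the author's code. The choices made here are:

- where batch normalization sits;
- `same` padding;
- the identity block reading its channel count from the input.

```python
import tensorflow as tf
from tensorflow.keras import layers

def identity_block_2(x, f, use_shortcut=True):
    """Two f x f convolutions; input and output shapes are identical."""
    filters = x.shape[-1]  # the identity block keeps the channel count
    shortcut = x
    x = layers.Conv2D(filters, f, padding='same')(x)
    x = layers.BatchNormalization()(x)
    x = layers.ReLU()(x)
    x = layers.Conv2D(filters, f, padding='same')(x)
    x = layers.BatchNormalization()(x)
    if use_shortcut:
        x = layers.Add()([x, shortcut])  # add before the final activation
    return layers.ReLU()(x)

def convolution_block_2(x, f, filters, s: int, use_shortcut=True):
    """Two f x f convolutions; the first uses stride s and changes channels."""
    shortcut = x
    x = layers.Conv2D(filters, f, strides=s, padding='same')(x)
    x = layers.BatchNormalization()(x)
    x = layers.ReLU()(x)
    x = layers.Conv2D(filters, f, padding='same')(x)
    x = layers.BatchNormalization()(x)
    if use_shortcut:
        # 1x1 projection so the shortcut matches the new shape
        shortcut = layers.Conv2D(filters, 1, strides=s)(shortcut)
        shortcut = layers.BatchNormalization()(shortcut)
        x = layers.Add()([x, shortcut])
    return layers.ReLU()(x)

inputs = layers.Input(shape=(32, 32, 64))
y = identity_block_2(inputs, 3)
y = convolution_block_2(y, 3, 128, 2)
model = tf.keras.models.Model(inputs, y)
print(tuple(y.shape))  # (None, 16, 16, 128)
```

With `use_shortcut=False`, both functions reduce to a plain stack of convolutions. That is exactly the "without shortcuts" variant compared in the experiments.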

The ResNet-50 implementation is:

```python
elif model_name == 'ResNet50':
    ...
```

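Here, identity_block_3 takes the input tensor, the kernel side length, the intermediate and output channel counts, and whether to use the shortcut. convolution_block_3 takes the input tensor, the kernel side length, the intermediate and output channel counts, the stride, and whether to use the shortcut.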
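A matching Keras sketch of the bottleneck blocks follows, again with assumed details: batch-norm placement, `same` padding, and the stride on the first 1x1 convolution (the placement this article's code uses). `filters1` is the narrow channel count of the first two convolutions, and `filters2` is the output channel count. The helper `_conv_bn` is hypothetical.

```python
import tensorflow as tf
from tensorflow.keras import layers

def _conv_bn(x, filters, k, s=1, relu=True):
    """Conv -> BatchNorm (-> ReLU); a hypothetical helper."""
    x = layers.Conv2D(filters, k, strides=s, padding='same')(x)
    x = layers.BatchNormalization()(x)
    return layers.ReLU()(x) if relu else x

def identity_block_3(x, f, filters1, filters2, use_shortcut=True):
    """1x1 reduce -> f x f -> 1x1 expand; input must have filters2 channels."""
    shortcut = x
    x = _conv_bn(x, filters1, 1)
    x = _conv_bn(x, filters1, f)
    x = _conv_bn(x, filters2, 1, relu=False)
    if use_shortcut:
        x = layers.Add()([x, shortcut])
    return layers.ReLU()(x)

def convolution_block_3(x, f, filters1, filters2, s: int, use_shortcut=True):
    """Shape-changing bottleneck block; stride s sits on the first 1x1 conv."""
    shortcut = x
    x = _conv_bn(x, filters1, 1, s)
    x = _conv_bn(x, filters1, f)
    x = _conv_bn(x, filters2, 1, relu=False)
    if use_shortcut:
        shortcut = _conv_bn(shortcut, filters2, 1, s, relu=False)
        x = layers.Add()([x, shortcut])
    return layers.ReLU()(x)

inputs = layers.Input(shape=(16, 16, 256))
y = convolution_block_3(inputs, 3, 128, 512, 2)
y = identity_block_3(y, 3, 128, 512)
model = tf.keras.models.Model(inputs, y)
print(tuple(y.shape))  # (None, 8, 8, 512)
```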

Finally, here is the code that computes the classification output:

```python
x = layers.AveragePooling2D((2, 2), (2, 2))(x)
...
```

Residual block implementation

```python
def identity_block_2(x, f, use_shortcut=True):
    ...
```

```python
def convolution_block_2(x, f, filters, s: int, use_shortcut=True):
    ...
```

```python
def identity_block_3(x, f, filters1, filters2, use_shortcut=True):
    ...
```

```python
def convolution_block_3(x, f, filters1, filters2, s: int, use_shortcut=True):
    ...
```

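One detail in this code deserves attention. In convolution_block_3, there is no single convention for whether the stride of 2 goes in the first or the second convolution, and frameworks differ here. The original paper places it in the first 1x1 convolution. torchvision, for example, places it in the 3x3 convolution, a variant often called ResNet v1.5. The code here puts it in the first 1x1 convolution.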
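One concrete difference between the two placements can be checked with index arithmetic. A 1x1 convolution with stride 2 reads only one pixel in each 2x2 patch. A 3x3 convolution with stride 2 and padding 1 still reads every input pixel. The sketch below lists which input positions along a single axis each option touches. The function name and the axis length 8 are illustrative.

```python
def touched_positions(n, k, stride, pad):
    """Input indices (along one axis of length n) read by a k-wide conv."""
    out_len = (n + 2 * pad - k) // stride + 1
    touched = set()
    for o in range(out_len):
        start = o * stride - pad
        for i in range(start, start + k):
            if 0 <= i < n:  # skip padded positions
                touched.add(i)
    return sorted(touched)

print(touched_positions(8, 1, 2, 0))  # [0, 2, 4, 6]
print(touched_positions(8, 3, 2, 1))  # [0, 1, 2, 3, 4, 5, 6, 7]
```

Along each axis the strided 1x1 convolution skips half the positions, so in 2D it ignores three quarters of its input. Both placements produce the same output shape.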

Experimental results

The project already includes the dataset preprocessing code, so it is easy to generate the dataset and train the models with TensorFlow.

```python
def main():
    ...
```

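To keep training short, I trained for only 20 epochs on a small dataset: 3000 training samples for ResNet-18 and 1000 for ResNet-50. The dataset size does not affect this comparison. We only compare how well each model fits the training data (its training error), not how well it generalizes, and that is enough to compare the four models.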

Here are the results of the four experiments.

ResNet-18

```text
Epoch 1/20
63/63 [==============================] - 75s 1s/step - loss: 1.9463 - accuracy: 0.5485
Epoch 2/20
63/63 [==============================] - 71s 1s/step - loss: 0.9758 - accuracy: 0.5423
Epoch 3/20
63/63 [==============================] - 81s 1s/step - loss: 0.8490 - accuracy: 0.5941
Epoch 4/20
63/63 [==============================] - 73s 1s/step - loss: 0.8309 - accuracy: 0.6188
Epoch 5/20
63/63 [==============================] - 72s 1s/step - loss: 0.7375 - accuracy: 0.6402
Epoch 6/20
63/63 [==============================] - 77s 1s/step - loss: 0.7932 - accuracy: 0.6769
Epoch 7/20
63/63 [==============================] - 78s 1s/step - loss: 0.7782 - accuracy: 0.6713
Epoch 8/20
63/63 [==============================] - 76s 1s/step - loss: 0.6272 - accuracy: 0.7147
Epoch 9/20
63/63 [==============================] - 77s 1s/step - loss: 0.6303 - accuracy: 0.7059
Epoch 10/20
63/63 [==============================] - 74s 1s/step - loss: 0.6250 - accuracy: 0.7108
Epoch 11/20
63/63 [==============================] - 73s 1s/step - loss: 0.6065 - accuracy: 0.7142
Epoch 12/20
63/63 [==============================] - 74s 1s/step - loss: 0.5289 - accuracy: 0.7754
Epoch 13/20
63/63 [==============================] - 73s 1s/step - loss: 0.5005 - accuracy: 0.7506
Epoch 14/20
63/63 [==============================] - 73s 1s/step - loss: 0.3961 - accuracy: 0.8141
Epoch 15/20
63/63 [==============================] - 74s 1s/step - loss: 0.4417 - accuracy: 0.8121
Epoch 16/20
63/63 [==============================] - 74s 1s/step - loss: 0.3761 - accuracy: 0.8136
Epoch 17/20
63/63 [==============================] - 73s 1s/step - loss: 0.2764 - accuracy: 0.8809
Epoch 18/20
63/63 [==============================] - 71s 1s/step - loss: 0.2698 - accuracy: 0.8878
Epoch 19/20
63/63 [==============================] - 72s 1s/step - loss: 0.1483 - accuracy: 0.9457
Epoch 20/20
63/63 [==============================] - 72s 1s/step - loss: 0.2495 - accuracy: 0.9079
```

ResNet-18 without shortcuts

```text
Epoch 1/20
...
```

ResNet-50

```text
Epoch 1/20
...
```

ResNet-50 without shortcuts

```text
Epoch 1/20
...
```

Comparing ResNet-18 and ResNet-50 shows that ResNet-50 does fit the training data somewhat better.

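Comparing the two models without shortcuts, ResNet-50 actually fits worse than ResNet-18. This matches the motivation behind ResNet. Without residual connections, an overly deep network is harder to optimize, and ends up with a higher training error rather than a lower one.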

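The training speed of the models is also worth a note. With the training set cut to one third of the size, ResNet-50 trains at roughly the same speed as ResNet-18. ResNet-50 has many more layers than ResNet-18 and uses convolutions with many channels in the middle of the network. Yet its overall parameter count only roughly doubles: about 11.7M versus 25.6M in the standard ImageNet configurations. This is thanks to the bottleneck structure, which reduces the amount of computation.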
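The parameter comparison can be reproduced by counting convolution weights for the standard ImageNet configurations in the paper. The stage widths are 64/128/256/512, and ResNet-50's bottleneck outputs are 4x wider. This sketch ignores biases, batch normalization and the fully connected layer, and assumes a 1x1 projection shortcut wherever the channel count changes. It is not a count of the exact model in tf_main.py, and the helper names are hypothetical.

```python
def conv_weights(k, c_in, c_out):
    """Weights in a k x k convolution (no bias)."""
    return k * k * c_in * c_out

def count_resnet(blocks, bottleneck):
    """Conv weights of an ImageNet-style ResNet (no BN, no FC, no biases)."""
    total = conv_weights(7, 3, 64)  # stem: 7x7 convolution, 3 -> 64 channels
    c_in = 64
    for stage, n_blocks in enumerate(blocks):
        mid = 64 * 2 ** stage  # 64, 128, 256, 512
        c_out = mid * 4 if bottleneck else mid
        for _ in range(n_blocks):
            if bottleneck:
                total += (conv_weights(1, c_in, mid)
                          + conv_weights(3, mid, mid)
                          + conv_weights(1, mid, c_out))
            else:
                total += conv_weights(3, c_in, mid) + conv_weights(3, mid, c_out)
            if c_in != c_out:  # projection shortcut when the shape changes
                total += conv_weights(1, c_in, c_out)
            c_in = c_out
    return total

r18 = count_resnet([2, 2, 2, 2], bottleneck=False)
r50 = count_resnet([3, 4, 6, 3], bottleneck=True)
print(r18, r50, round(r50 / r18, 2))  # 11166912 23454912 2.1
```

The remaining parameters come from the 1000-class fully connected layer and the batch-norm scale and shift. Adding them gives 11,689,512 and 25,557,032, the parameter counts torchvision reports for resnet18 and resnet50. Depth grows almost threefold, but the weight count only about doubles.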

Summary

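In this article, I presented TensorFlow implementations of ResNet-18 and ResNet-50. The code covers both implementations of each of the two kinds of residual block in the classic ResNet, reproducing all the model building blocks from the original paper. The experiments also confirmed, on this small dataset, that ResNet's residual connections are effective.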

In the future, I will also write a PyTorch implementation of ResNet, together with a detailed walkthrough of the paper.