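Attention Is All You Need (the Transformer paper) is required reading for anyone starting out in deep learning today. However, the work was originally written to solve machine translation and assumes that context, so beginners without the relevant background find it hard to follow. In this article I first fill in the background and then walk through the paper, with the aim that readers with only a little deep learning experience can follow it in full.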

Background

Machine translation means translating a piece of text from one language into another language.

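Because a translation has no single correct answer, accuracy is a poor measure for a machine translation algorithm. Machine translation datasets therefore usually provide several reference outputs for each input. Measuring how much the algorithm's output overlaps with the reference outputs, in terms of shared n-grams, tells us how good the output is. This metric is called the BLEU score; higher is better.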
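The overlap idea behind BLEU can be sketched with clipped n-gram precision, its main building block. This is only a simplified illustration: the function name `clipped_precision` and the example sentences are made up for this sketch, and the full BLEU score additionally combines several n-gram orders and applies a brevity penalty.

```python
from collections import Counter


def clipped_precision(candidate, references, n=1):
    """Fraction of candidate n-grams that also occur in a reference.

    Each candidate n-gram count is clipped by the largest count of that
    n-gram in any single reference, so repeating a word does not help.
    """
    def ngrams(tokens):
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    cand_counts = ngrams(candidate)
    if not cand_counts:
        return 0.0

    # Largest count of every n-gram over all references.
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref).items():
            max_ref[gram] = max(max_ref[gram], count)

    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


# Hypothetical example data.
candidate = "the cat is on the mat".split()
references = [
    "the cat sat on the mat".split(),
    "there is a cat on the mat".split(),
]
p1 = clipped_precision(candidate, references, n=1)
p2 = clipped_precision(candidate, references, n=2)
```

Here every candidate word appears in some reference, so the unigram precision is perfect, while only three of the five bigrams match.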

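In the early days of deep learning, people used RNNs (recurrent neural networks) for machine translation. The input text is first preprocessed into a sequence of tokens. The RNN processes the tokens one at a time while maintaining a state that summarizes the text seen so far. From the state at the current step, the RNN can output one token for that step.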

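A token can be a word or a single Chinese character, or a special symbol that stands for whitespace, an unknown character, or the start of a sentence.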

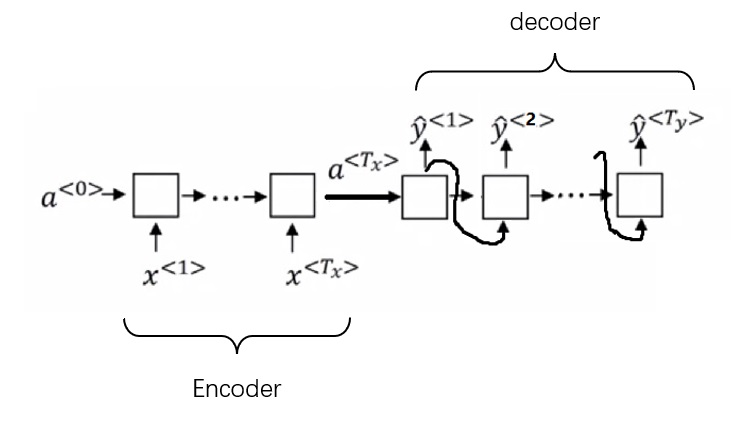

Concretely, in step $t$ the inputs are the previous state $a^{< t - 1 >}$ and the current input token $x ^{< t >}$, and the outputs are the current state $a^{< t >}$ and the current output token $y ^{< t >}$.
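One step of such a recurrence can be sketched as follows. This is a minimal vanilla RNN cell under assumptions of my own: the `tanh` activation, the weight names `W_aa`, `W_ax`, `W_ya`, and the toy weight values are illustrative and not taken from the paper.

```python
import math


def rnn_step(a_prev, x_t, W_aa, W_ax, W_ya):
    """One RNN step: returns the new state a_t and the output scores y_t."""
    def matvec(W, v):
        return [sum(w * vi for w, vi in zip(row, v)) for row in W]

    # New state mixes the previous state with the current input token.
    pre = [p + q for p, q in zip(matvec(W_aa, a_prev), matvec(W_ax, x_t))]
    a_t = [math.tanh(p) for p in pre]
    # Output scores are read off the new state.
    y_t = matvec(W_ya, a_t)
    return a_t, y_t


# Hypothetical toy weights: state size 2, one-hot input size 3, output size 3.
W_aa = [[0.5, 0.0], [0.0, 0.5]]
W_ax = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
W_ya = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

a = [0.0, 0.0]
outputs = []
for x in ([1, 0, 0], [0, 1, 0], [0, 0, 1]):  # three one-hot tokens
    a, y = rnn_step(a, x, W_aa, W_ax, W_ya)  # each step needs the previous state
    outputs.append(y)
```

The loop makes the serial dependency visible: step $t$ cannot start before step $t-1$ has produced its state.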

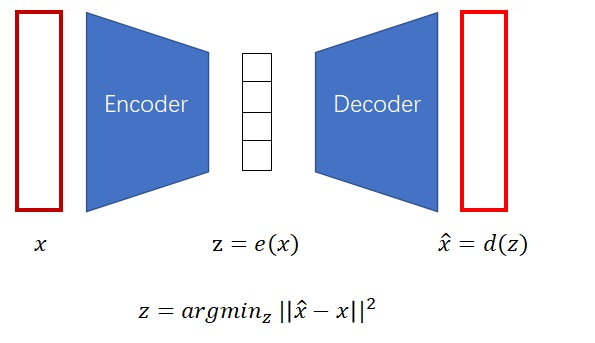

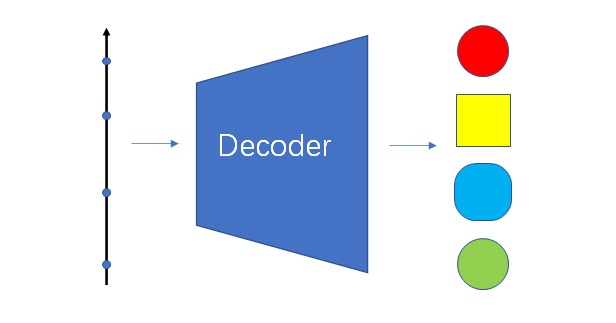

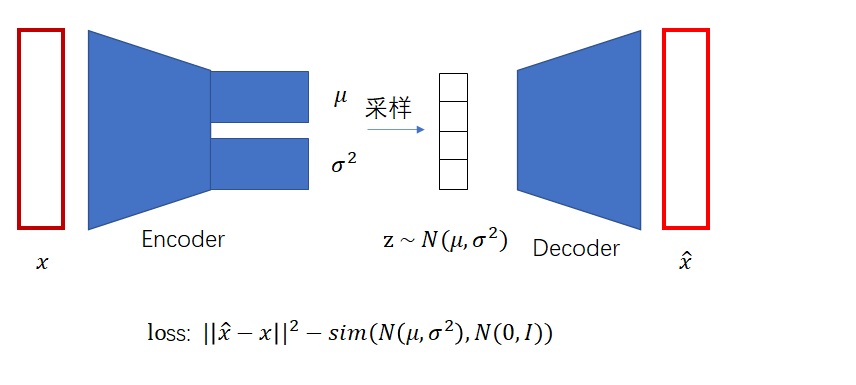

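This simple RNN only works for tasks where input and output have the same length. In machine translation, however, the output usually differs in length from the input, so a new architecture was adopted. The first half of the RNN only consumes inputs and the second half only produces outputs (each output is fed back as the next step's input to supply extra information). The two halves communicate through a single state $a^{< T_x >}$. If we view that state as an encoding of the input, the first half can be called the "encoder" and the second half the "decoder", which is why this design is known as the "encoder-decoder" architecture.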

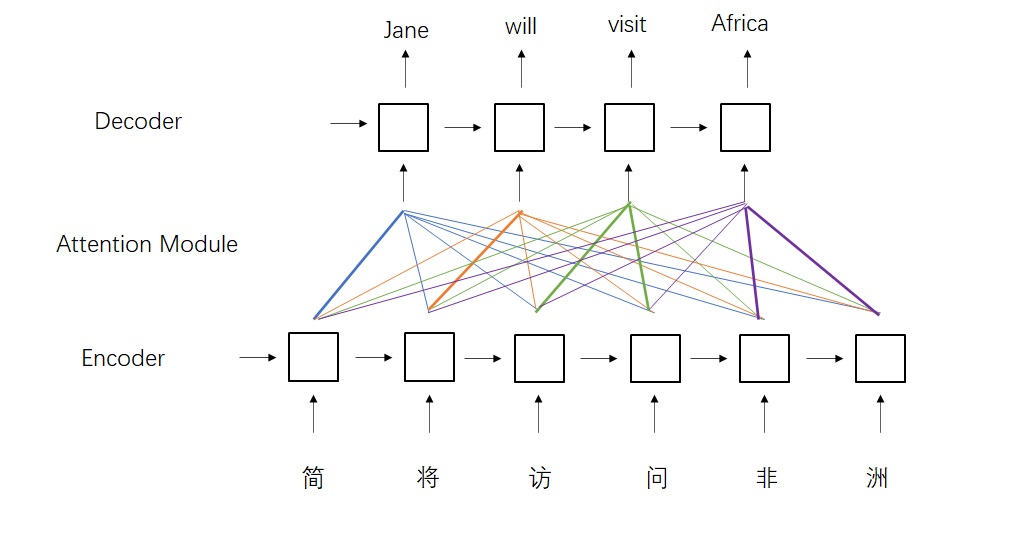

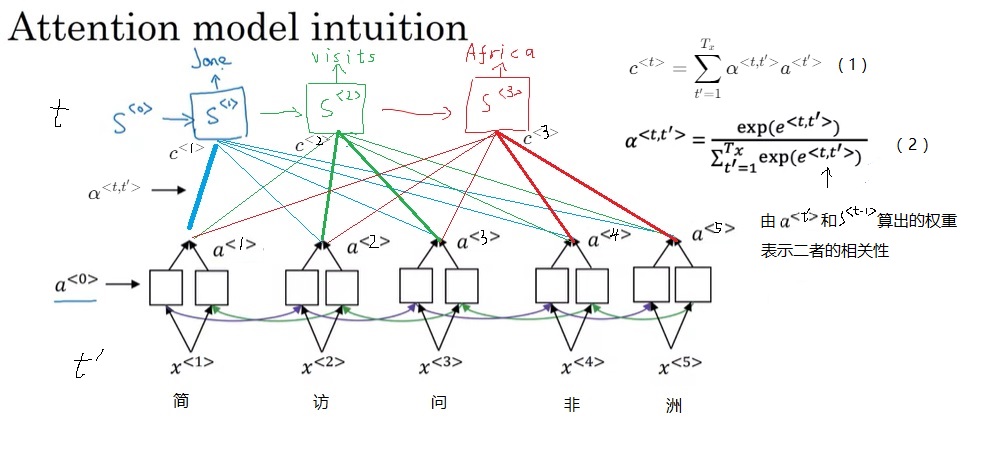

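This architecture has a weakness: the encoder and decoder exchange information only through one hidden state, so it performs poorly on longer texts. Attention-based architectures were proposed to address this. They still use an encoder and a decoder, but at each step the decoder receives a weighted sum of all encoder states instead of a single intermediate state. The weight that each output assigns to each input is called attention, and its size depends on how relevant that input is to that output. This improves how the encoder and decoder exchange information and works better on long texts.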

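Although attention models already performed well, every RNN-based model shares one problem: the input state of each step depends on the output state of the previous step, so the computation must run serially. As a result, RNNs are usually slow to train.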

Against this background, the Transformer arrived: a model that discards RNNs and relies on attention alone.

Abstract and Introduction

With the background in place, the paper becomes much easier to read.

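The abstract conveys its message very concisely.

It does not, however, explain the motivation behind the Transformer's design. Let's look for that in the introduction.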

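The first paragraph of the introduction reviews RNN architectures. RNNs, represented by LSTM and GRU, had achieved state-of-the-art results on many sequence tasks, and much research was still pushing the limits of recurrent language models and "encoder-decoder" architectures.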

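The second paragraph turns to the shortcomings of RNNs. An RNN maintains a hidden state that depends on the hidden state of the previous time step. This inherently sequential computation prevents parallelization within a training example. It matters most for long sequences: each sequence takes more memory, so fewer examples fit in a batch and batching across examples cannot make up for the lost parallelism. Many studies had tried to mitigate the problem, but the fundamentally sequential nature of the computation remained.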

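The previous paragraph hints at the first design motivation of the Transformer: more parallelism in training. The third paragraph gives the other motivation: the attention mechanism. Attention was an integral component of the best models at the time. It can relate any input-output pair directly, without being limited by their distance in the sequence, unlike early "encoder-decoder" models that passed information through one hidden state. However, almost all attention mechanisms were used together with RNNs.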

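Since attention can capture relationships within sequences regardless of how far apart the elements are, why not build a parallel-friendly model from attention alone? That is the Transformer architecture proposed in the paper. It avoids RNNs altogether and relies entirely on attention to capture the dependencies between input and output sequences. The resulting model both trains faster and performs better.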

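Having read the abstract and introduction, we understand the basic motivation behind the Transformer. The authors wanted to overcome the RNN's lack of parallelism and to make full use of attention, which has no sequential constraint, so they proposed a model built only from attention. The trained model turned out surprisingly well: training was much faster, and the model outperformed the best previously reported models on the paper's translation benchmarks.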

Next, we can jump straight to Section 3 to study the structure of the Transformer.

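The Attention Mechanism

The paper introduces the Transformer architecture top-down. But since we do not yet know the individual modules, the overall framework is hard to grasp at first. So we will study the architecture bottom-up instead.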

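First, jump to Section 3.2, which introduces the core mechanism of the Transformer: attention. Before reading that text, let's build an abstract picture of what attention actually does.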

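An Example of Attention Computation

The name "attention" is actually not very intuitive. A better name would be "global information lookup". One attention computation is much like one query against a database. Suppose we have a database that uses a person's name as the key and their age as the value: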

    {
        张三: 18,
        张三: 20,
        李四: 22,
        张伟: 19
    }

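Now we have a query: what is the average age of everyone named 张三 (Zhang San)? If we wrote a program, we would compare the string "张三" with every key, collect the values of all matching entries, add them up, and take the average. The average is (18+20)/2=19.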

Often, though, the query is less precise. For example, we may want the average age of everyone with the surname 张 (Zhang). Now we do not test key == 张三 but key[0] == 张. The average is (18+20+19)/3=19.
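The two exact-match queries above can be written as ordinary filtering code. This is just a sketch of the "database" view; the helper `average_age` and the list-of-pairs layout are choices made here for illustration (a list is used because the name 张三 appears twice).

```python
# (name, age) records from the example database.
records = [("张三", 18), ("张三", 20), ("李四", 22), ("张伟", 19)]


def average_age(records, matches):
    """Average the ages of all records whose name satisfies `matches`."""
    ages = [age for name, age in records if matches(name)]
    return sum(ages) / len(ages)


# Query 1: everyone named 张三.
exact = average_age(records, lambda name: name == "张三")
# Query 2: everyone whose surname is 张.
surname = average_age(records, lambda name: name[0] == "张")
```

Both queries depend on a hand-written condition, which is exactly what the vector formulation that follows removes.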

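The query may be fuzzier still, too fuzzy to express as a simple condition. The most general approach is to model the query and each key as vectors, compute a similarity between the query and each key (for example their inner product), and use the similarities as weights for a weighted sum of the values. In this way, however abstract the query is, we can model query and key as vectors, replace the condition with vector similarity, and replace "select and average" with a weighted sum. "Attention" refers to exactly these weights.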

Let's apply the new method to the example above. We first model every key as a vector, which might give us a database like this:

    {
        [1, 2, 0]: 18,  # 张三
        [1, 2, 0]: 20,  # 张三
        [0, 0, 2]: 22,  # 李四
        [1, 4, 0]: 19   # 张伟
    }

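Suppose key[0]==1 means the surname is 张. Our query "average age of everyone surnamed 张" can then be written as the vector [1, 0, 0]. The weights computed from this query and all the keys are: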

    dot([1, 0, 0], [1, 2, 0]) = 1
    dot([1, 0, 0], [1, 2, 0]) = 1
    dot([1, 0, 0], [0, 0, 2]) = 0
    dot([1, 0, 0], [1, 4, 0]) = 1

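Next we use these weights to compute an average. For an average, the weights must sum to 1, so we normalize them first and then take the weighted sum of the values. In this toy example, dividing each weight by their total is enough:

    normalize([1, 1, 0, 1]) = [1/3, 1/3, 0, 1/3]
    dot([1/3, 1/3, 0, 1/3], [18, 20, 22, 19]) = 19

The Transformer normalizes with softmax instead. A true softmax over [1, 1, 0, 1] gives approximately [0.30, 0.30, 0.11, 0.30], so the non-matching key keeps a small weight and the result is about 19.33 rather than exactly 19.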

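In this way we have replaced conditional statements with vector operations and completed a global lookup over the database. Those three values of 1/3 are the attention that the query pays to each key.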
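The vector version of the query can be checked with a few lines of code. The sketch below computes the weights in two ways: by dividing each score by the sum of the scores, which reproduces the three values of 1/3, and by a real softmax, which is what the Transformer uses. The helper names are my own.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


keys = [[1, 2, 0], [1, 2, 0], [0, 0, 2], [1, 4, 0]]
values = [18, 20, 22, 19]
query = [1, 0, 0]

scores = [dot(query, k) for k in keys]            # [1, 1, 0, 1]

# Simple normalization: weights are exactly 1/3, 1/3, 0, 1/3.
sum_weights = [s / sum(scores) for s in scores]
hard_answer = dot(sum_weights, values)            # about 19

# Softmax: the non-matching key (李四) still gets a small weight.
soft_weights = softmax(scores)
soft_answer = dot(soft_weights, values)           # a little above 19
```

With softmax the answer is pulled slightly toward the non-matching value 22, which is why attention is best thought of as a soft lookup.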

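Scaled Dot-Product Attention (Section 3.2.1)

The computation we just did is almost exactly the attention used in the Transformer. The paper calls it Scaled Dot-Product Attention. Its formula is:

$$Attention(Q, K, V) = softmax(\frac{QK^T}{\sqrt{d_k}})V$$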

Let's first see what $Q, K, V$ are in the example above. $K$ is the easiest: it is simply the array of key vectors, that is,

    K = [[1, 2, 0], [1, 2, 0], [0, 0, 2], [1, 4, 0]]

Likewise, $V$ is the array of value vectors. In our example the values are real numbers, and a real number can be viewed as a vector of length 1. So $V$ in the example is

    V = [[18], [20], [22], [19]]

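In the example we performed only one query. Strictly speaking, our operation should therefore be written as

$$MyAttention(q, K, V) = softmax(qK^T)V$$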

where the query $q$ is [1, 0, 0].

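In practice we can run many queries at once. Packing all the $q$ vectors into a matrix $Q$, and adding a scaling factor, gives the formula

$$Attention(Q, K, V) = softmax(\frac{QK^T}{\sqrt{d_k}})V$$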

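Wait, what does $d_k$ mean? $d_k$ is the length of the query and key vectors. Because queries and keys are combined by a dot product, the two kinds of vectors must have the same length. The value vectors may have a different length, which the paper calls $d_v$. In our example, $d_k=3, d_v=1$.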
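The formula can be turned into a small function. The sketch below uses plain Python lists to stay dependency-free (a real implementation would use an array library), and it reuses the toy $K$ and $V$ from the example. The second query `[0, 0, 1]` is an extra query invented here to show batching.

```python
import math


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(row):
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    scores = matmul(Q, K_T)                              # shape: n_queries x n_keys
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights                   # output: n_queries x d_v


K = [[1, 2, 0], [1, 2, 0], [0, 0, 2], [1, 4, 0]]
V = [[18], [20], [22], [19]]
Q = [[1, 0, 0],   # the "surname is 张" query from the example
     [0, 0, 1]]   # a second, hypothetical query that matches 李四's key

out, weights = attention(Q, K, V)
```

Each row of `weights` sums to 1, and each row of `out` is a weighted average of the ages, so it always lies between 18 and 22.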

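Why scale $QK^T$ by $\sqrt{d_k}$? Because softmax has very small gradients in regions where its inputs are large in magnitude, which slows down gradient descent. We therefore want the dot products fed into softmax to stay moderate in size. When $d_k$ is large, that is, when the vectors are long, the dot products tend to be large: if the components of the query and key are independent with mean 0 and variance 1, their dot product has variance $d_k$. Dividing by $\sqrt{d_k}$ keeps the values from growing too large.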
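The effect of the scaling factor can be checked empirically. The sketch below draws random queries and keys with independent standard normal components (an assumption made for the experiment, matching the argument in the paper's footnote) and measures the spread of their dot products with and without the division by $\sqrt{d_k}$.

```python
import math
import random


def dot_product_std(d_k, scaled, samples=2000, seed=0):
    """Empirical standard deviation of q.k for random q, k of length d_k."""
    rng = random.Random(seed)
    values = []
    for _ in range(samples):
        q = [rng.gauss(0, 1) for _ in range(d_k)]
        k = [rng.gauss(0, 1) for _ in range(d_k)]
        s = sum(a * b for a, b in zip(q, k))
        values.append(s / math.sqrt(d_k) if scaled else s)
    mean = sum(values) / samples
    return math.sqrt(sum((v - mean) ** 2 for v in values) / samples)


# Without scaling, the spread grows roughly like sqrt(d_k).
raw_std = {d: dot_product_std(d, scaled=False) for d in (4, 64)}
# With scaling, the spread stays close to 1 regardless of d_k.
scaled_std = {d: dot_product_std(d, scaled=True) for d in (4, 64)}
```

Keeping the softmax inputs at a spread of about 1 keeps the softmax away from its saturated, low-gradient region.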

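As mentioned, $QK^T$ computes the similarity between queries and keys, and the dot product is not the only way to measure similarity. Another common attention function is additive attention, which uses a feed-forward network with a single hidden layer to compute the similarity of two vectors. Dot-product attention is faster in practice because it reduces to highly optimized matrix multiplication, so the paper uses it for performance reasons.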

Self-Attention

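Self-attention is mentioned in Section 3.2.3. I think that studying self-attention right after the principle of attention helps you understand the mechanism faster. The paper itself gives self-attention very little introduction, which makes it hard for beginners. So here I follow the presentation in the Deep Learning Specialization and introduce self-attention through a more concrete example.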

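Now that we roughly understand attention as "global information lookup" and know its formula, let's use the Transformer's self-attention as an example to understand what attention means in more depth.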

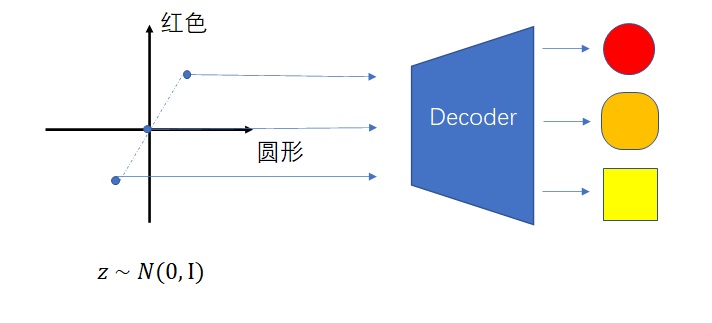

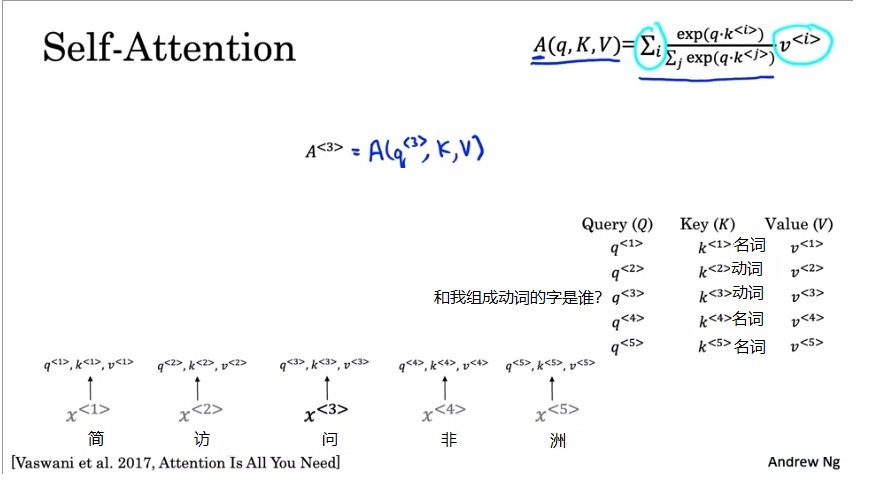

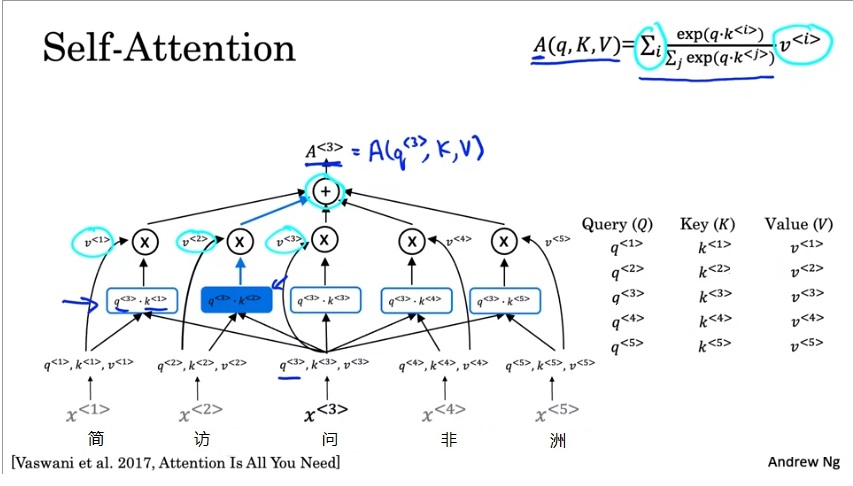

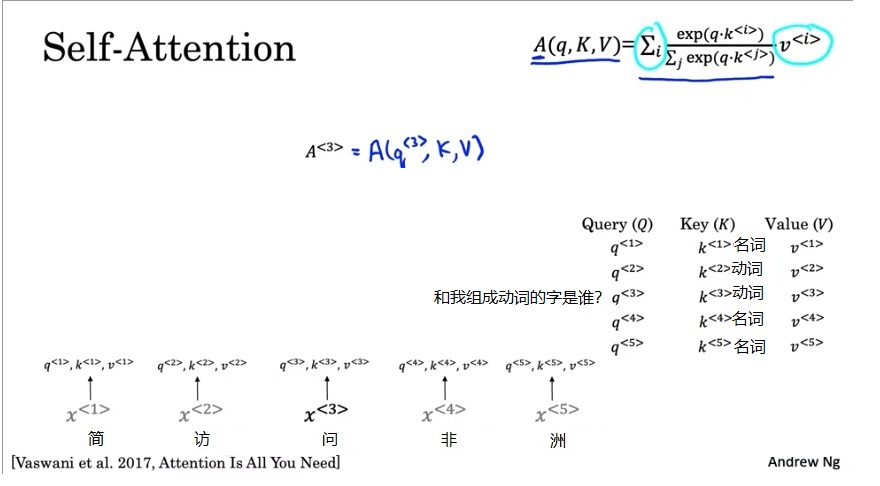

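The purpose of the self-attention module is to produce a vector representation for every input token, one that reflects not only the token itself but also its specific role in the sentence. Take the sentence "简访问非洲" ("Jane visits Africa"). The third character "问" has several senses in Chinese, such as "询问" (to inquire) and "慰问" (to express sympathy). We want a representation that captures its specific sense in this sentence. Here "问" combines with the preceding character to form the word "访问" (to visit), so it should take the sense "询问" rather than "慰问". "询问" is then the representation of "问" in this sentence.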

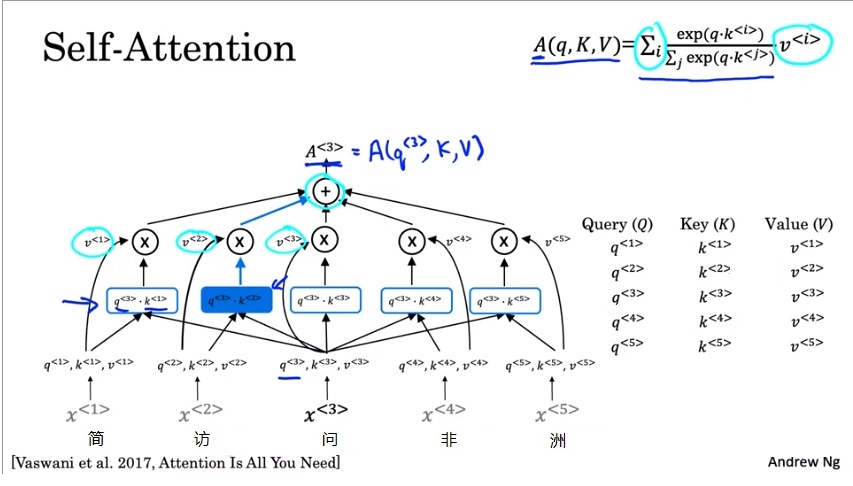

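Let's see how the self-attention module produces such a representation. Its inputs are three matrices $Q, K, V$. More precisely, these matrices are arrays of vectors: the query, key, and value vectors of every token. The module outputs a vector representation $A$ for each token, where $A^{< t >}$ is the representation of the $t$-th token in this sentence.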

For now, don't worry about how each token's query, key, and value are computed; this is explained later.

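Let's stay with the sentence "简访问非洲" and see how self-attention is computed. Suppose we want to compute $A^{< 3 >}$, which represents the precise meaning of "问" in the sentence. To obtain it, we can ask a question that can be expressed mathematically: "What is the word embedding of the character that forms a word with '问'?" This question is the query vector $q^{< 3 >}$ of the third token.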

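The character that forms a word with "问" is probably a verb. Suppose, for the sake of illustration, that each token's key $k^{< t >}$ encodes its part of speech and each token's value $v^{< t >}$ is its embedding.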

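Then, based on each character's part of speech (key), we look for verbs (keys similar to the query), compute the weights (dot product of query and key, followed by softmax), and take the weighted average of all values. The result more or less answers the question $q^{< 3 >}$.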

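After the computation, $q^{< 3 >}$ and $k^{< 2 >}$ will probably turn out to be strongly related, that is, their inner product is large. The resulting $A^{< 3 >}$ should therefore be approximately $v^{< 2 >}$: the answer to "Which character forms a word with '问'?" is the second character, "访".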

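That is how $A^{< 3 >}$ is computed. Precisely, $A^{< 3 >}=A(q^{< 3 >}, K, V)$. Similarly, $A^{< 1 >}$ through $A^{< 5 >}$ are all computed with this formula. Combining the computations of all $A$, and stacking the $q$ vectors into $Q$, gives the attention formula.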

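In the previous section we learned that attention is global information lookup. In this section we saw one application: by letting every word in a sentence query the other words, we can produce a more meaningful vector representation for each word.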

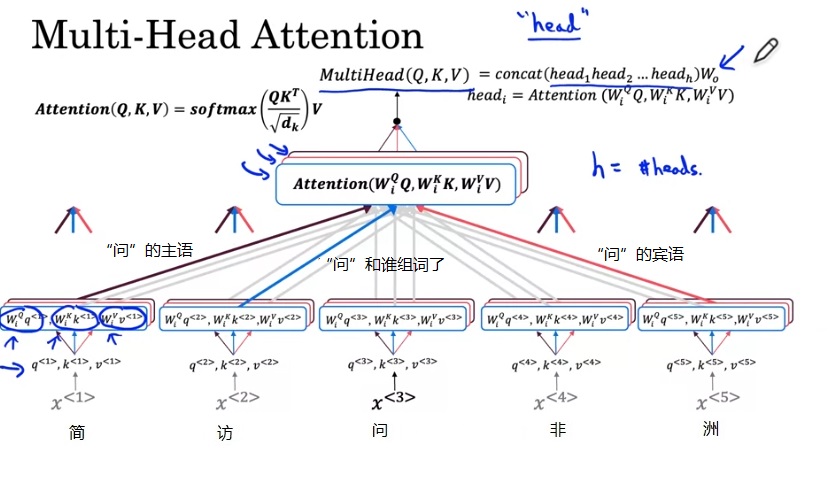

One question remains open: where do each word's query, key, and value come from? For that we need another mechanism in the Transformer: multi-head attention.

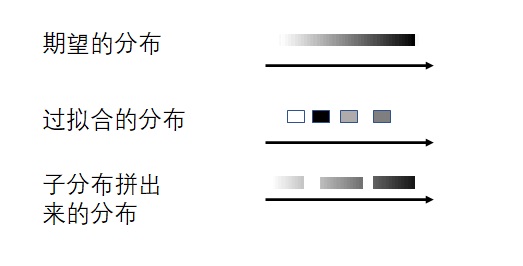

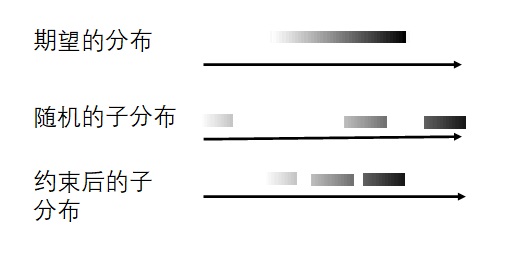

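Multi-Head Attention (Section 3.2.2)

In self-attention, the query, key, and value of a word should depend only on that word itself, so all three vectors should be derived from the word's embedding. They should also be learnable rather than hand-specified. We can therefore use learnable parameters to describe the transformation from word embedding to query, key, and value. In summary, the inputs $Q, K, V$ of self-attention are computed as:

$$Q = EW^Q, \quad K = EW^K, \quad V = EW^V$$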

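where $E$ is the word embedding matrix, that is, the array of every word's embedding, and $W^Q, W^K, W^V$ are learnable parameter matrices. Most intermediate vectors in the Transformer have length $d_{model}$, including the word embeddings. So if the input sentence has length $n$, $E$ has shape $n \times d_{model}$, $W^Q, W^K$ have shape $d_{model} \times d_k$, and $W^V$ has shape $d_{model} \times d_v$.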

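Just as a convolutional layer uses multiple kernels to produce multiple feature channels, we use multiple sets of $W^Q, W^K, W^V$ to produce multiple self-attention results, which makes each word's representation richer. This mechanism is called multi-head attention. Applied to self-attention, the formula is:

$$head_i = Attention(EW_i^Q, EW_i^K, EW_i^V)$$

$$MultiHeadSelfAttention(E) = Concat(head_1, ..., head_h)W^O$$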

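The Transformer paper appears to treat all vectors as row vectors, so parameter matrices are multiplied on the right rather than on the more common left.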

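where $h$ is the number of heads in multi-head self-attention and $W^O$ is another parameter matrix. Both the input and output vectors of the multi-head attention module have length $d_{model}$, so $W^O$ has shape $hd_v \times d_{model}$ (each head outputs vectors of length $d_v$, and there are $h$ heads). In the paper, the Transformer's default configuration is: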

$d_{model} = 512$

$h = 8$

$d_k = d_v = d_{model}/h = 64$
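To make the shapes concrete, here is a dependency-free sketch of multi-head self-attention. It uses a deliberately tiny configuration ($d_{model}=8$, $h=2$, so $d_k=d_v=4$) and random weights; both are assumptions for illustration, not the paper's trained parameters, and the helper names are my own.

```python
import math
import random


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(row):
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in matmul(Q, K_T)]
    return matmul(weights, V)


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def multi_head_self_attention(E, heads, W_O):
    # One attention call per head, each with its own projections of E.
    head_outputs = [
        attention(matmul(E, W_Q), matmul(E, W_K), matmul(E, W_V))
        for W_Q, W_K, W_V in heads
    ]
    # Concatenate the h outputs of length d_v for every token.
    concat = [[v for head in head_outputs for v in head[t]] for t in range(len(E))]
    return matmul(concat, W_O)                      # back to length d_model


rng = random.Random(0)
n, d_model, h = 5, 8, 2                             # toy sizes, not the paper's
d_k = d_v = d_model // h
E = random_matrix(n, d_model, rng)                  # n x d_model
heads = [
    (random_matrix(d_model, d_k, rng),              # W_i^Q
     random_matrix(d_model, d_k, rng),              # W_i^K
     random_matrix(d_model, d_v, rng))              # W_i^V
    for _ in range(h)
]
W_O = random_matrix(h * d_v, d_model, rng)          # (h * d_v) x d_model
out = multi_head_self_attention(E, heads, W_O)
```

Because $d_k = d_v = d_{model}/h$, the concatenated heads have length $d_{model}$ again, so the total cost is similar to that of a single head with full dimensionality.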

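In fact, multi-head attention is not limited to self-attention. Generalizing, if we replace the single input $E$ of multi-head self-attention with three matrices $Q, K, V$, the formula becomes:

$$head_i = Attention(QW_i^Q, KW_i^K, VW_i^V)$$

$$MultiHead(Q, K, V) = Concat(head_1, ..., head_h)W^O$$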

With the attention mechanism understood, we can go back to Section 3.1 and study the overall architecture of the Transformer.

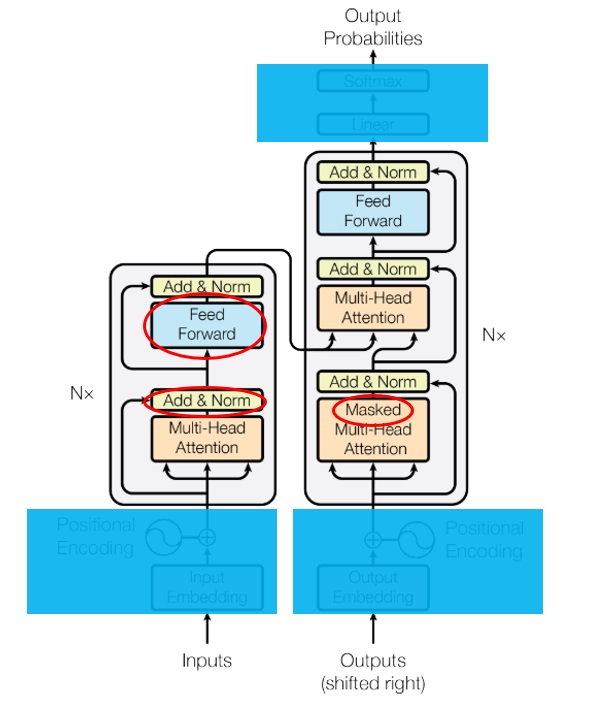

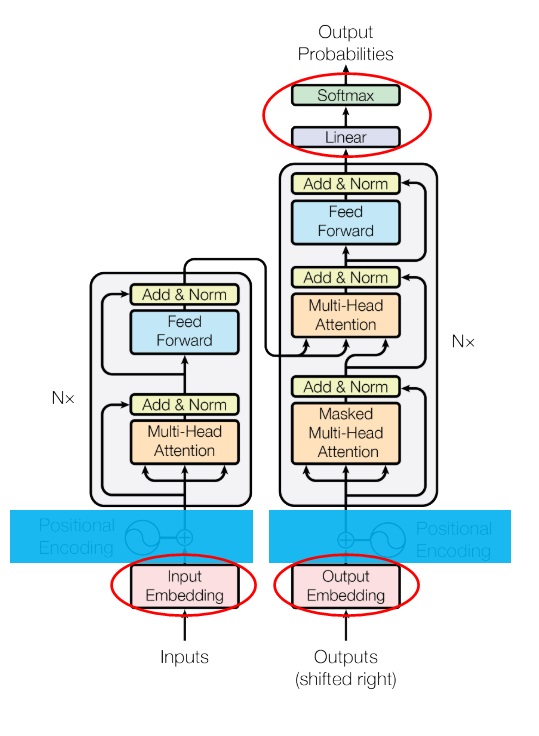

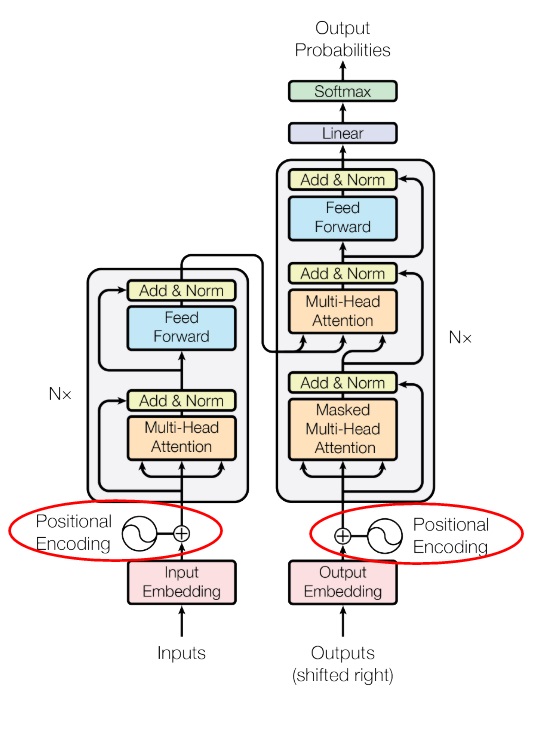

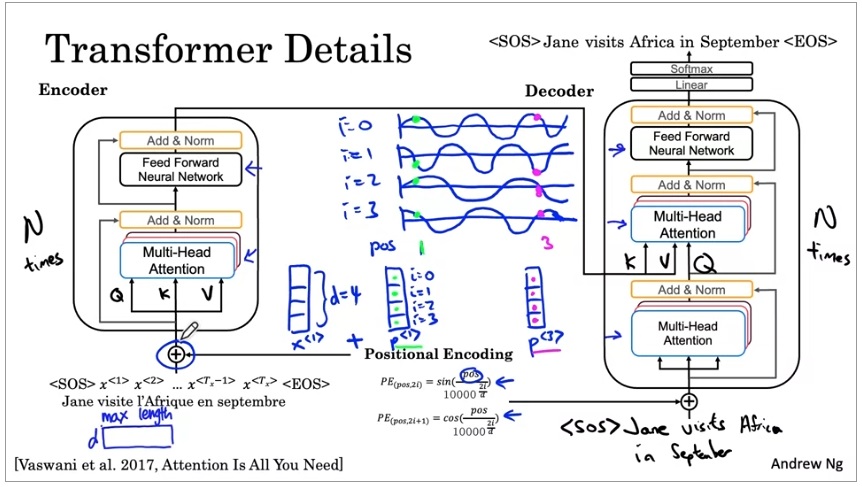

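Figure 1 of the paper shows the Transformer architecture. Since we have not read the later sections, some modules are still unfamiliar. On this pass we can focus on the backbone of the model and work out how the encoder and decoder are organized.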

So far we only understand the multi-head attention module, and three things in the backbone are still unclear:

Add & Norm

Feed Forward

Why one of the multi-head attention blocks is labeled Masked

Let's work through these three in turn.

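Residual Connections (Section 3.1)

The Transformer uses residual connections similar to those in ResNet: if the mapping of a module is $F(x)$, the module's output is $Normalization(F(x)+x)$. Unlike ResNet, which uses batch normalization, the Transformer normalizes with LayerNorm.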

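Note that a residual connection imposes one requirement: the input $x$ and the module output $F(x)$ must have the same dimension so that they can be added. In the Transformer, all vectors, including the word embeddings, have length $d_{model}=512$.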
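The Add & Norm step can be sketched for a single vector as follows. This is a simplified illustration: the layer normalization here omits the learnable gain and bias that practical implementations include, and the `sublayer` used in the example is a made-up stand-in for attention or the feed-forward network.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize one vector to zero mean and (almost) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def add_and_norm(x, sublayer):
    """Residual connection followed by layer normalization."""
    fx = sublayer(x)
    # The residual sum only works if F(x) has the same length as x.
    assert len(fx) == len(x)
    return layer_norm([a + b for a, b in zip(x, fx)])


x = [1.0, 2.0, 3.0, 4.0]
# Hypothetical sublayer: halves every component.
y = add_and_norm(x, lambda v: [0.5 * e for e in v])
```

LayerNorm computes its statistics over the features of one token, so it does not depend on the other tokens or on the batch.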

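Feed-Forward Network

The Feed Forward block in the architecture diagram is simply a fully connected network, applied to each position separately. It consists of two linear layers with a ReLU activation in between:

$$FFN(x) = max(0, xW_1 + b_1)W_2 + b_2$$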

The dimension of the hidden layer in the middle is denoted $d_{ff}$. By default, $d_{ff}=2048$.
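For a single position, the feed-forward sublayer amounts to the few lines below. The sizes ($d_{model}=2$, $d_{ff}=4$) and the weight values are toy assumptions chosen so the result can be checked by hand.

```python
def feed_forward(x, W1, b1, W2, b2):
    """Position-wise FFN: max(0, x W1 + b1) W2 + b2, with x as a row vector."""
    # First linear layer (d_model -> d_ff) followed by ReLU.
    hidden = [
        max(0.0, sum(xi * w for xi, w in zip(x, col)) + b)
        for col, b in zip(zip(*W1), b1)
    ]
    # Second linear layer (d_ff -> d_model).
    return [
        sum(hi * w for hi, w in zip(hidden, col)) + b
        for col, b in zip(zip(*W2), b2)
    ]


# Hypothetical toy parameters: d_model = 2, d_ff = 4.
W1 = [[1.0, 0.0, -1.0, 0.5],
      [0.0, 1.0, 1.0, 0.5]]
b1 = [0.0, 0.0, 0.0, 0.0]
W2 = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
b2 = [0.5, -0.5]

out = feed_forward([1.0, -1.0], W1, b1, W2, b2)
```

With this input only the first hidden unit survives the ReLU, so the output is the first row of `W2` plus `b2`.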

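Overall Architecture and Masked Multi-Head Attention

We can now follow most of the overall architecture. Only once we understand how the whole model runs can we see where the "masked" in front of multi-head attention comes from.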

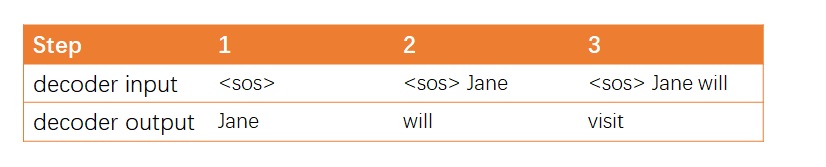

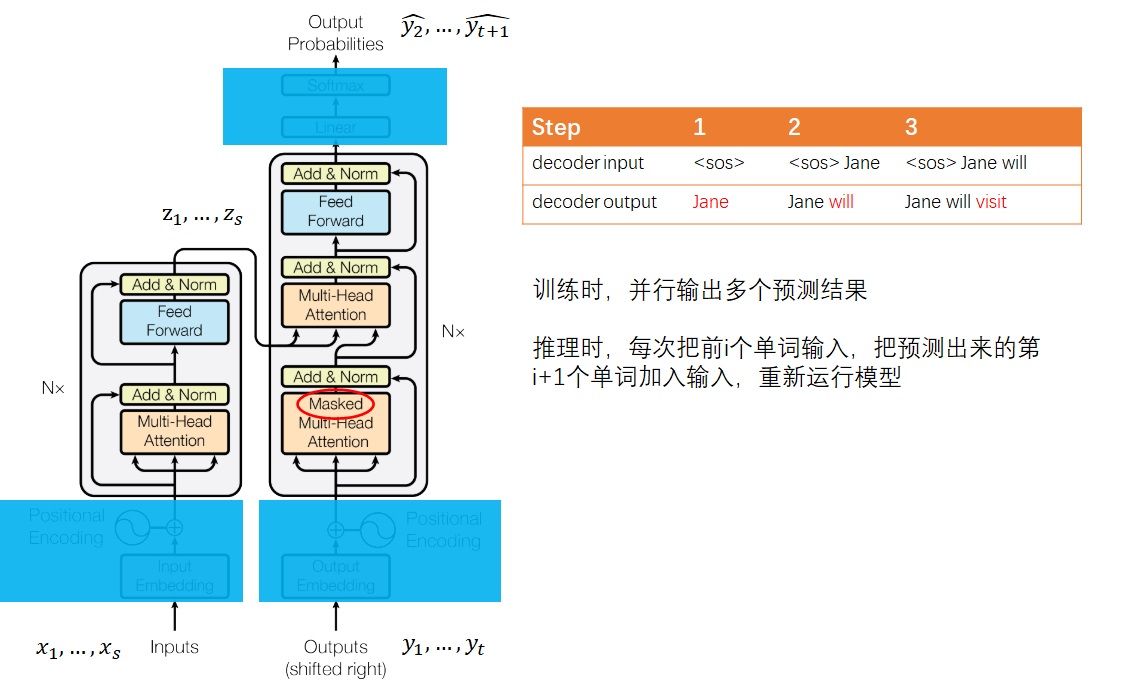

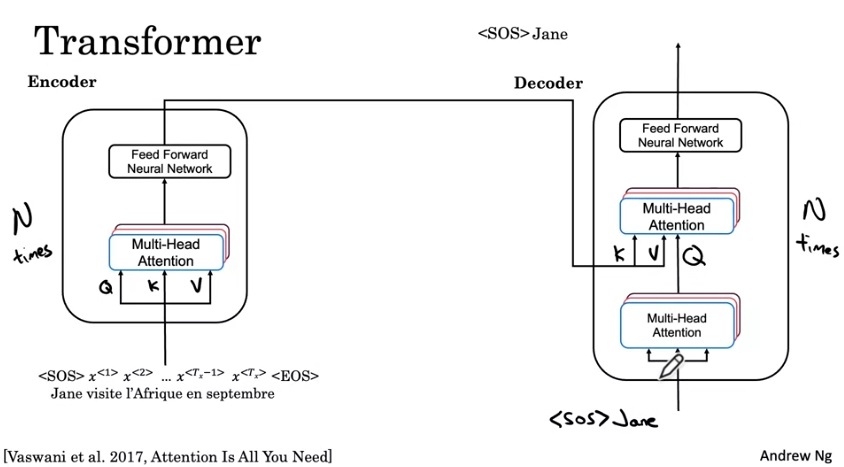

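The beginning of Section 3 describes how the model runs. Like most competitive sequence transduction models, the Transformer uses an encoder-decoder architecture. Early RNN-based sequence transduction models typically take the first $i$ words as input and output the $(i+1)$-th word when generating a sequence.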

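The Transformer is different. The input sequence $(x_1, …, x_s)$ is encoded by the encoder into an intermediate representation $\mathbf{z} = (z_1, …, z_s)$. Given $\mathbf{z}$, the decoder takes $(y_1, …, y_t)$ as input and outputs predictions for $(y_2, …, y_{t+1})$ all at once.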

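By default the Transformer produces its outputs in parallel, which is how it is trained. At inference time, however, the sequence has to be generated serially, one token at a time. Naively calling the parallel forward pass at every step involves a great deal of redundant computation, so inference code can be optimized to avoid it.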

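Concretely, the input sequence $x$ passes through $N=6$ identical layers, each made up of two sublayers. The first sublayer is multi-head attention, more precisely multi-head self-attention, which extracts a more meaningful representation for each input word. The data then passes through the feed-forward sublayer. At the end, the encoder outputs the encoding $\mathbf{z}$.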

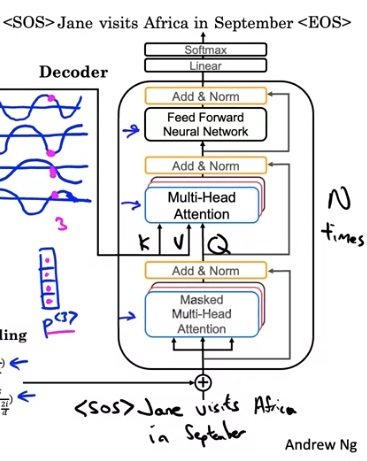

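With $\mathbf{z}$ available, the decoder produces the result. The decoder's input is the sequence generated so far, which passes through a masked multi-head self-attention sublayer. Let's ignore the mask for now and treat it as an ordinary multi-head self-attention layer. It plays the same role as in the encoder: extracting more meaningful representations.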

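Next, the data passes through another multi-head attention layer. This one is special: its K and V come from $\mathbf{z}$, while its Q comes from the output of the previous sublayer. Why this design? It is inherited from earlier attention models. As the accompanying figure shows, in those models every output word computes an attention weight against every input word in order to find the few input words most relevant to it. In terms of the attention formula, Q is the output words and K, V are the input words.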

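After the second multi-head attention layer, the data goes through a feed-forward network, as in the encoder. Finally, the network outputs the next word for every time step in parallel.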

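This parallel computation needs some care. When predicting word $t+1$, the model must not see information from position $t+1$ onward. So only the inputs up to position $t$ should be kept, and the later inputs hidden. This is done by adding a mask. The table below gives an informal example: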

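| Input | Output |
| --- | --- |
| (y1, --, --, --) | y2 |
| (y1, y2, --, --) | y3 |
| (y1, y2, y3, --) | y4 |

Here -- marks an input position that is hidden by the mask.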

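This is why the decoder's multi-head self-attention layer carries the word "masked". In the paper, the mask is implemented by setting the corresponding inputs of the softmax in the attention formula to $- \infty$ (with an input of $- \infty$, softmax assigns an attention weight of 0, so the masked positions contribute nothing to the output). The masked entries are those above the main diagonal, so each mask has a strictly upper triangular pattern.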
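The masking trick can be sketched in a few lines. The example below uses all-zero attention scores for four positions (an arbitrary choice for illustration) and adds $-\infty$ above the diagonal before the softmax; the function names are my own.

```python
import math


def causal_mask(n):
    """0 where position i may look at position j (j <= i), -inf otherwise."""
    return [[0.0 if j <= i else -math.inf for j in range(n)] for i in range(n)]


def masked_softmax(scores, mask):
    out = []
    for row, mask_row in zip(scores, mask):
        shifted = [s + m for s, m in zip(row, mask_row)]
        top = max(shifted)                          # the diagonal is never masked
        exps = [math.exp(v - top) for v in shifted]  # exp(-inf) is exactly 0.0
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


scores = [[0.0] * 4 for _ in range(4)]              # placeholder scores
weights = masked_softmax(scores, causal_mask(4))
```

Row $i$ of `weights` spreads its attention only over positions up to $i$, so the prediction for the next word cannot depend on later inputs.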

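Embedding Layers

Having covered the backbone of the Transformer, let's look at the pre- and post-processing applied to its inputs and outputs.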

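As in most sequence transduction models, the input and output of the Transformer backbone are sequences of word embeddings. A word embedding is essentially a matrix that converts one-hot vectors into meaningful vectors. In the Transformer, the decoder's embedding layer and the output linear layer share weights: the output linear layer uses the transpose of the embedding matrix and acts roughly as the reverse of the embedding, mapping the embeddings produced by the network back to scores over the vocabulary. If the input and output of a task are in the same language, the encoder's and decoder's embedding layers can share weights as well.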

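The paper states that the input and output embedding layers and the linear layer before the softmax share weights. This description is not entirely clear. If the input and output are in different languages, for example Chinese in and English out, sharing one word embedding makes little sense unless the two sides use a shared vocabulary.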

In the embedding layers, the weights are multiplied by $\sqrt{d_{model}}$.

Since the model has to predict a word, the output linear layer is followed by an ordinary softmax.
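The relationship between the embedding layer and the output layer can be sketched with one shared matrix. The three-word vocabulary and the matrix `E` below are toy assumptions; the point is only that the same weights are used to embed a token and, transposed, to score the vocabulary.

```python
import math


def embed(token_id, E):
    """Look up a token's embedding and scale it by sqrt(d_model)."""
    d_model = len(E[0])
    return [v * math.sqrt(d_model) for v in E[token_id]]


def output_probs(h, E):
    """Score every vocabulary entry with the shared matrix, then softmax."""
    logits = [sum(a * b for a, b in zip(h, row)) for row in E]  # h times E^T
    top = max(logits)
    exps = [math.exp(l - top) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical embedding matrix: vocabulary of 3 tokens, d_model = 2.
E = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

h = embed(1, E)                 # pretend the network returns the embedding unchanged
probs = output_probs(h, E)
predicted = probs.index(max(probs))
```

If the network's output vector resembles the embedding of some token, the shared output layer assigns that token the highest probability.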

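Positional Encoding

One module in the architecture diagram remains: positional encoding. Both RNNs and CNNs naturally make use of the order of a sequence. The Transformer backbone, however, cannot: attention by itself is unaware of token order. The Transformer therefore uses a mechanism called "positional encoding", which modifies the embedded inputs of the encoder and decoder to give the model information about sequence order.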

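The output of the embedding layer is an array of vectors, the sequence of word embeddings. Let $pos$ denote the position in the array and $i$ a dimension of the vector. We add a real-valued code to every number in every vector, and this code should satisfy the following properties: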

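Property 1: for the same $pos$ and different $i$, that is, for different elements of one word embedding, the codes differ.

Property 2: for the same dimension, different $pos$ have different codes, and the codes of different positions are related in a regular way, i.e. $f(pos+1) - f(pos) = f(pos) - f(pos - 1)$.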

It is easy to design an encoding function with these two properties:

$$Encoding(pos, i) = \frac{pos}{1000^{i}}$$

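That is, for each dimension $i$, three decimal digits after the decimal point are used to represent the different values of $pos$. The relationship between positions also holds.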

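However, this encoding is not friendly to learning. We would prefer all codes to be of similar magnitude and confined to a small fixed range. The Transformer therefore uses trigonometric functions, whose values always lie in $[-1, 1]$. This positional encoding (PE) is defined as follows.

$$PE_{(pos, 2i)} = sin(pos / 10000^{2i/d_{model}})$$

$$PE_{(pos, 2i+1)} = cos(pos / 10000^{2i/d_{model}})$$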

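Different $i$ give sinusoids with different periods, so for a given $pos$ the values across dimensions do not simply repeat, which satisfies property 1. In addition, by the angle-sum identities:

$$sin(a + b) = sin(a)cos(b) + cos(a)sin(b)$$

$$cos(a + b) = cos(a)cos(b) - sin(a)sin(b)$$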

$f(pos + k)$ is a linear function of $f(pos)$ for any fixed offset $k$, so different positions are related to one another. This satisfies property 2.
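The linear relationship between positions can be verified numerically. The sketch below computes the sinusoidal encoding from the paper's formula and then rebuilds the encoding of position $pos + k$ from that of $pos$ using only the angle-sum identities. The function names and the tiny $d_{model}=8$ are choices made for this illustration.

```python
import math


def positional_encoding(pos, d_model):
    """Sinusoidal encoding of one position (d_model assumed even)."""
    pe = []
    for i in range(d_model // 2):
        angle = pos / (10000 ** (2 * i / d_model))
        pe.extend([math.sin(angle), math.cos(angle)])   # dimensions 2i and 2i+1
    return pe


def shift(pe, k, d_model):
    """Compute PE(pos + k) from PE(pos) with a fixed linear map per (sin, cos) pair."""
    out = []
    for i in range(d_model // 2):
        w = k / (10000 ** (2 * i / d_model))            # depends on k only, not on pos
        s, c = pe[2 * i], pe[2 * i + 1]
        out.extend([s * math.cos(w) + c * math.sin(w),
                    c * math.cos(w) - s * math.sin(w)])
    return out


d_model = 8
direct = positional_encoding(7, d_model)
shifted = shift(positional_encoding(4, d_model), 3, d_model)
```

The coefficients in `shift` depend only on the offset $k$, which is what makes relative positions easy for the model to use.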

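The paper's authors also tried learned positional embeddings. Experiments showed that the two perform about equally. The authors kept the sinusoidal version because it may let the model extrapolate to sequences longer than those seen in training, whereas learned positional embeddings only cover the lengths encountered during training.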

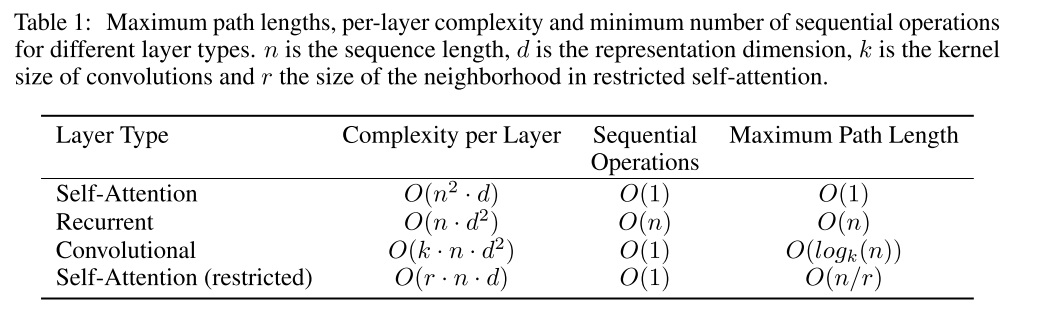

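Why Self-Attention

In Section 4 of the paper, the authors compare self-attention layers with recurrent and convolutional layers and discuss several advantages of self-attention.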

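A self-attention layer is a computational unit that serves the same purpose as a recurrent or convolutional layer: mapping one sequence of vectors to another, as when the encoder maps $x$ to the intermediate representation $z$. The paper compares three criteria: computational complexity per layer, the number of sequential operations, and the maximum path length.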

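The first two are easy to understand; the third needs some explanation. Maximum path length is the largest number of steps a signal needs to travel from one position to another. For example, when applying ordinary 3x3 convolutions to an image with side length n, it takes on the order of $n/3$ convolutions for information to propagate from the top-left pixel to the bottom-right pixel. With kernel side length $k$, the maximum path length is $O(n/k)$. With dilated convolutions whose dilation rate doubles at each layer (1, 2, 4, ...), the receptive field of a pixel is 3x3 after the first convolution, 7x7 after the second, 15x15 after the third, and so on, growing exponentially. The maximum path length is then $O(log_k(n))$.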

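We can discuss the benefits of self-attention along these three criteria. First, sequential operations. As the introduction says, the biggest problem of recurrent layers is that they cannot be trained in parallel: they need $O(n)$ sequential operations. Self-attention layers, like convolutions, can be fully parallelized and need only $O(1)$.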

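Next, complexity per layer. Let $n$ be the sequence length and $d$ the length of the word embedding vectors. A self-attention layer costs $O(n^2 \cdot d)$, a recurrent layer $O(n \cdot d^2)$, and a convolutional layer with kernel width $k$ costs $O(k \cdot n \cdot d^2)$. The other layers are quadratic in $d$, while self-attention is linear in $d$ but quadratic in $n$. In typical translation models $d$ is larger than $n$, in which case self-attention is the cheapest.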
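A quick calculation shows where the crossover lies. The sketch below plugs numbers into the per-layer complexity expressions from the paper's comparison table ($n^2 d$ for self-attention, $n d^2$ for recurrent layers, $k n d^2$ for convolutions), ignoring constant factors. The sequence lengths 50 and 4096 are arbitrary examples.

```python
def per_layer_cost(n, d, k=3):
    """Operation counts up to constant factors, for sequence length n and width d."""
    return {
        "self_attention": n * n * d,
        "recurrent": n * d * d,
        "convolutional": k * n * d * d,
    }


# A sentence-length sequence: n is much smaller than d.
short_seq = per_layer_cost(n=50, d=512)
# A very long sequence: n is much larger than d.
long_seq = per_layer_cost(n=4096, d=512)
```

For the short sequence self-attention is roughly ten times cheaper than the recurrent layer; for the long one the quadratic term in $n$ makes it the more expensive of the two.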

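Finally, maximum path length. Attention is a global lookup by nature, so information can pass between any two elements in $O(1)$ steps, far fewer than convolutional or recurrent layers need.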

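To reduce the per-layer cost for very long sequences, self-attention can be restricted so that each element only queries its $r$ nearest elements. The paper only proposes this idea and does not report experiments on it.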

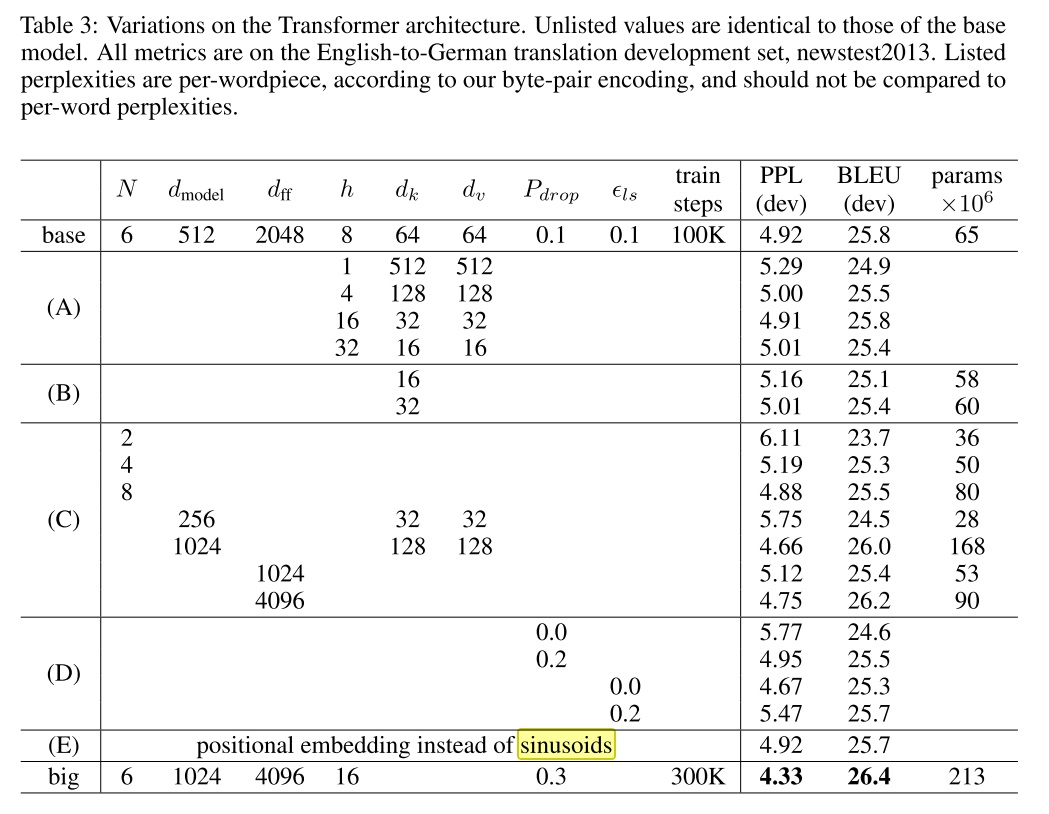

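Experiments and Results

The work is evaluated on two translation tasks, English-German and English-French. With the paper's default (base) configuration, training takes only 12 hours on 8 P100 GPUs. The authors use the Adam optimizer with a learning rate schedule that first warms up and then decays. Two kinds of regularization are used: 1) dropout on the output of each sublayer, with rate 0.1; 2) label smoothing. The Transformer outperformed the previously reported models on both translation tasks while substantially reducing training cost.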
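The learning rate schedule used with Adam can be written down directly. The sketch below follows the formula given in the paper's training section, with its stated defaults of $d_{model}=512$ and 4000 warmup steps; the function name is my own and `step` is assumed to start at 1.

```python
def transformer_lr(step, d_model=512, warmup_steps=4000):
    """Linear warmup for `warmup_steps` steps, then inverse square-root decay."""
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)


warmup_lr = transformer_lr(2000)    # still increasing linearly
peak_lr = transformer_lr(4000)      # the two branches meet at the end of warmup
decayed_lr = transformer_lr(16000)  # decays proportionally to 1 / sqrt(step)
```

The rate peaks at the end of the warmup phase, at roughly 7e-4 for these defaults, and a fourfold increase in the step count afterwards halves it.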

The paper also reports ablation results for different Transformer configurations.

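Experiment A shows that, for a fixed amount of computation, the ratio between $h$ and $d_k, d_v$ has to be tuned with care: too many or too few heads both hurt. These experiments also show that multi-head attention is better than single-head attention.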

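Experiment B shows that reducing $d_k$ hurts model quality. The authors take this to mean that determining the compatibility between queries and keys is not easy, and that a more sophisticated compatibility function than the dot product might improve performance.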

Experiments C and D show that larger models are better and that dropout is very helpful in avoiding overfitting.

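As stated in the main text, experiment E examines learned positional embeddings. Their results are nearly identical to those of the sinusoidal encoding.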

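Conclusion

To address the lack of parallelism in RNNs, this work proposed the Transformer, a model built solely from attention mechanisms. The Transformer works very well: it trains faster and outperforms other models on two translation tasks.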

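The authors also look forward to applying the Transformer to other tasks. For tasks with long sequences, such as images, audio, and video, the local, restricted attention mentioned in the paper may be required. Because generating the output sequence is still serial, the authors also plan to investigate how to make generation less sequential.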

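In hindsight, the Transformer is one of the most influential deep learning models of recent years. It first flourished in NLP and then gradually spread to CV and other fields. Some of the paper's predictions have come true: many papers now discuss how to use attention on images and how to use restricted attention to reduce the computational cost of long sequences.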

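I believe beginners in deep learning, whatever their field, should study the Transformer carefully. Before doing so, it is best to learn about RNNs and the classic encoder-decoder architecture, and then attention models. With that foundation, the paper is much easier to read. When reading it, the most important thing is to understand the principle behind the attention formula, then self-attention and multi-head attention, and finally positional encoding. The design choices specific to machine translation deserve less attention.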